Exploring Python Task Queue Libraries with a Load Test

Task queues play a crucial role in managing and processing background jobs efficiently. Whether you need to handle asynchronous tasks, schedule periodic jobs, or manage a large volume of short-lived tasks, task queue libraries can help you achieve these goals.

In this post, I explore the most popular Python task queue libraries: Python-RQ, ARQ, Celery, Huey, and Dramatiq. I benchmarked their performance and evaluated their ease of use.

Update [2025-11-01]: Added Taskiq and Procrastinate to the benchmark.

It’s worth mentioning that these libraries are relatively new to me, as my daily job doesn’t involve much Python programming. Still, I was able to learn them and complete this research within a day, because they are all straightforward to set up for basic jobs.

The Benchmark

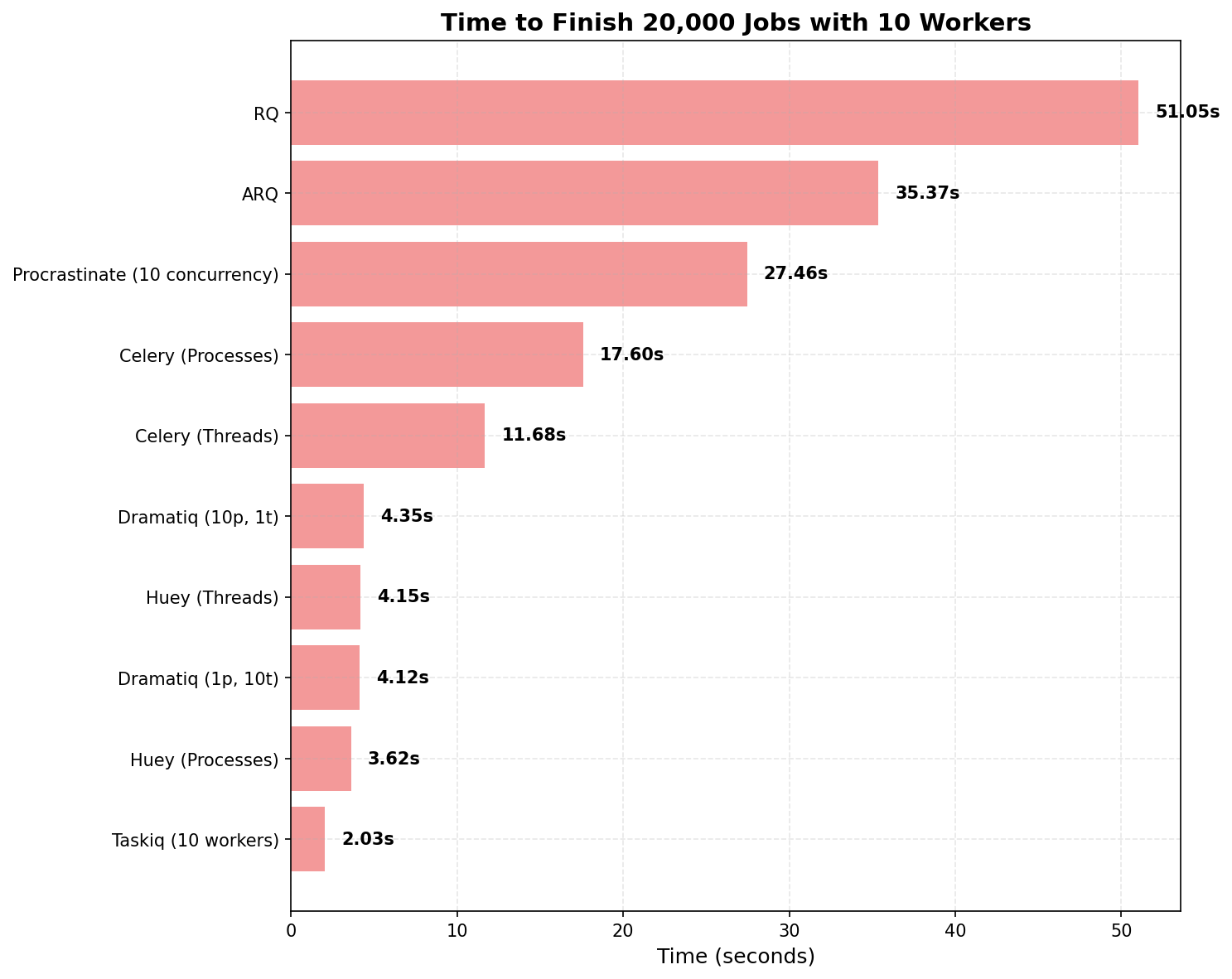

My main interest in this benchmark is to get a sense of how each library performs under a large load, handles concurrency, and scales. I measured the time taken to process 20,000 jobs with 10 workers. The benchmarks were run on my 14-inch MacBook Pro (M2 Pro, 16 GB of RAM), using Redis as the broker (except for Procrastinate, which uses PostgreSQL).

The benchmark uses no-op jobs that only print the current time. That isn’t representative of real-world workloads, but it does isolate the overhead and efficiency of the queue management itself. In fact, 20,000 jobs probably shouldn’t be considered a very heavy load; I think at least 10 times more would be a better measure for a serious stress test. In the future, I’d like to test with heavier loads and see how each library handles them.

You can find all the scripts and configurations for running the benchmark in this GitHub repository. I’ve included instructions in the README so you can easily replicate the tests. Alongside benchmarking the processing speed of each library, I also tested the speed of enqueuing all 20,000 jobs, although that was not the main focus.
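To make the measurement concrete, here is a minimal, standard-library-only sketch of the two timings described above: how long it takes to enqueue every job, and how long a fixed pool of workers takes to drain the queue. It is not one of the scripts from the repository. The names `run_benchmark` and `print_time_job` are hypothetical, and an in-process `queue.Queue` with threads stands in for a real broker and worker processes, so the numbers it produces are not comparable with the results below.

```python
import queue
import threading
import time
from datetime import datetime


def print_time_job(_job_id):
    """No-op style job: it only prints the current time."""
    print(datetime.now().isoformat())


def run_benchmark(num_jobs=20_000, num_workers=10, job=print_time_job):
    """Enqueue num_jobs jobs, then time num_workers threads draining them."""
    jobs = queue.Queue()

    # Phase 1: time how long it takes to enqueue every job.
    start = time.perf_counter()
    for job_id in range(num_jobs):
        jobs.put(job_id)
    enqueue_seconds = time.perf_counter() - start

    processed = 0
    lock = threading.Lock()

    def worker():
        nonlocal processed
        while True:
            try:
                job_id = jobs.get_nowait()
            except queue.Empty:
                return  # queue drained, this worker exits
            job(job_id)
            with lock:
                processed += 1

    # Phase 2: time how long the workers take to process everything.
    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    start = time.perf_counter()
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    process_seconds = time.perf_counter() - start

    return {
        "processed": processed,
        "enqueue_seconds": enqueue_seconds,
        "process_seconds": process_seconds,
    }


if __name__ == "__main__":
    # A silent job keeps the output short; pass print_time_job to mimic
    # the no-op job described in the post.
    print(run_benchmark(job=lambda _job_id: None))
```

With a real library, phase 1 would call that library's enqueue API and phase 2 would be observed from the worker side, but the split into two timed phases stays the same.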

Results

The final results for the time to finish processing 20,000 jobs with 10 workers are as follows:

- RQ: 51.05 seconds

- ARQ: 35.37 seconds

- Celery (Threads): 11.68 seconds

- Celery (Processes): 17.60 seconds

- Huey (Threads): 4.15 seconds

- Huey (Processes): 3.62 seconds

- Dramatiq (Threads): 4.12 seconds

- Dramatiq (Processes): 4.35 seconds

- Taskiq: 2.03 seconds

- Procrastinate: 27.46 seconds

Here is a related chart for better visualization:

As you can see, Huey, Dramatiq, and Taskiq perform exceptionally well, finishing roughly 12 to 25 times faster than Python-RQ. Both Python-RQ and ARQ struggle in this test. The worker pool feature in Python-RQ seems relatively new, which might affect its performance. ARQ doesn’t have a built-in way to start multiple workers, so I used supervisord to start 10 of them.
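The relative speeds can be checked directly from the timings listed above. The short script below recomputes each library's speedup over RQ and its approximate throughput in jobs per second. The timings are copied from the results list; the function names are just illustrative.

```python
# Seconds to process 20,000 jobs with 10 workers, copied from the results list.
RESULTS_SECONDS = {
    "RQ": 51.05,
    "ARQ": 35.37,
    "Celery (Threads)": 11.68,
    "Celery (Processes)": 17.60,
    "Huey (Threads)": 4.15,
    "Huey (Processes)": 3.62,
    "Dramatiq (Threads)": 4.12,
    "Dramatiq (Processes)": 4.35,
    "Taskiq": 2.03,
    "Procrastinate": 27.46,
}
TOTAL_JOBS = 20_000


def speedup_vs(baseline, results=RESULTS_SECONDS):
    """How many times faster each entry is than the baseline entry."""
    base = results[baseline]
    return {name: base / seconds for name, seconds in results.items()}


def throughput(results=RESULTS_SECONDS, total_jobs=TOTAL_JOBS):
    """Approximate jobs processed per second for each entry."""
    return {name: total_jobs / seconds for name, seconds in results.items()}


if __name__ == "__main__":
    ratios = speedup_vs("RQ")
    rates = throughput()
    # Fastest first.
    for name in sorted(RESULTS_SECONDS, key=RESULTS_SECONDS.get):
        print(f"{name:22s} {ratios[name]:5.1f}x vs RQ  {rates[name]:8.0f} jobs/s")
```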

Usability

Both Celery and Dramatiq have comprehensive documentation, but to me, the one from Python-RQ is probably the cleanest and easiest to read.

Python-RQ and ARQ provide an enqueue method that takes the function and its parameters and adds them to the queue. Celery, Huey, and Dramatiq take a different approach: you decorate the job function itself. The decorated function then carries its own methods for enqueuing and for configuring its behavior, which is quite neat. Among them, I found Huey’s approach slightly uncomfortable, because simply calling the function enqueues it instead of running it.
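The three styles are easier to compare side by side. The sketch below is a toy in-memory queue written for illustration only. `ToyQueue` and its methods are hypothetical and are not the API of any of the libraries above; they only mirror the general shape of an explicit enqueue call, a decorator that adds a `send`-like method, and a decorator that makes a plain call enqueue the job.

```python
from collections import deque
from functools import wraps


class ToyQueue:
    """Hypothetical in-memory queue used only to contrast API styles."""

    def __init__(self):
        self.pending = deque()

    def enqueue(self, func, *args, **kwargs):
        """Explicit style: pass the function and its arguments to the queue."""
        self.pending.append((func, args, kwargs))

    def task(self, func):
        """Decorator style: the function gains a send() method for enqueuing.

        Calling the function directly still runs it immediately.
        """
        def send(*args, **kwargs):
            self.enqueue(func, *args, **kwargs)

        func.send = send
        return func

    def call_enqueues(self, func):
        """Decorator style where a plain call enqueues instead of running."""
        @wraps(func)
        def wrapper(*args, **kwargs):
            self.enqueue(func, *args, **kwargs)

        return wrapper

    def run_all(self):
        """Stand-in for a worker: run every pending job in order."""
        results = []
        while self.pending:
            func, args, kwargs = self.pending.popleft()
            results.append(func(*args, **kwargs))
        return results


def demo():
    q = ToyQueue()

    def add(a, b):
        return a + b

    @q.task
    def mul(a, b):
        return a * b

    @q.call_enqueues
    def sub(a, b):
        return a - b

    q.enqueue(add, 1, 2)   # explicit enqueue
    mul.send(3, 4)         # enqueue through the decorated function
    sub(10, 4)             # looks like a normal call, but only enqueues
    return q.run_all()


if __name__ == "__main__":
    print(demo())
```

The last style is the one that can surprise a reader of the calling code: `sub(10, 4)` returns nothing useful at the call site, and the work only happens once a worker picks the job up.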

From the consumer side, Celery’s CLI is the most mature. Dramatiq and Huey provide enough options to tune the important worker settings easily, with examples and explanations that make those settings easier to understand.

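Taskiq supports Redis Streams as a broker, which can be more reliable than a plain Redis list: with stream consumer groups, a delivered message stays pending until the consumer acknowledges it, so a job is not silently lost if a worker dies mid-task. Redis Streams also simplify the library’s implementation, avoiding many potential issues and headaches for developers.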
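As a rough illustration of why acknowledgements matter, here is a small in-memory model. It assumes the reliability difference comes from the pop-and-forget behavior of a list versus the deliver-then-acknowledge behavior of a stream consumer group. It does not talk to Redis and is not Taskiq's implementation; both classes and the `simulate` function are hypothetical.

```python
from collections import deque


class ListStyleQueue:
    """Popping removes the job immediately, like popping from a list."""

    def __init__(self, jobs):
        self.items = deque(jobs)

    def pop(self):
        return self.items.popleft() if self.items else None


class StreamStyleQueue:
    """Delivered jobs stay in a pending set until acknowledged.

    Simplification: a real stream keeps entries in an append-only log;
    this model only tracks delivery state.
    """

    def __init__(self, jobs):
        self.items = deque(jobs)
        self.pending = {}  # job -> name of the consumer it was delivered to

    def read(self, consumer):
        if not self.items:
            return None
        job = self.items.popleft()
        self.pending[job] = consumer
        return job

    def ack(self, job):
        self.pending.pop(job, None)

    def reclaim(self):
        """Make unacknowledged jobs available again for a retry."""
        stale = list(self.pending)
        self.pending.clear()
        self.items.extend(stale)
        return stale


def simulate(crash_on="job-2"):
    """Process three jobs with a worker that crashes once on crash_on."""
    jobs = ["job-1", "job-2", "job-3"]

    # List style: the crashed job was already removed, so it is lost.
    list_queue = ListStyleQueue(jobs)
    list_done = []
    while (job := list_queue.pop()) is not None:
        if job == crash_on:
            continue  # worker died mid-job; nothing remembers this job
        list_done.append(job)

    # Stream style: the crashed job stays pending and is retried later.
    stream_queue = StreamStyleQueue(jobs)
    stream_done = []
    crashed = False
    while True:
        job = stream_queue.read("worker-a")
        if job is None:
            if not stream_queue.reclaim():
                break  # nothing queued and nothing pending
            continue
        if job == crash_on and not crashed:
            crashed = True
            continue  # died before ack; the job remains pending
        stream_done.append(job)
        stream_queue.ack(job)

    return list_done, stream_done


if __name__ == "__main__":
    print(simulate())
```

In this model the list-style run finishes two of the three jobs, while the stream-style run finishes all three, with the crashed job completed last after it is reclaimed.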

Procrastinate uses PostgreSQL as its broker instead of Redis, leveraging PostgreSQL’s LISTEN/NOTIFY and FOR UPDATE SKIP LOCKED features. Several other libraries employ the same approach, including PgQueuer, pgmq, and solid_queue. I included it in my benchmark because it’s a cost-effective solution when the application already uses PostgreSQL as its database.